BERT (Bidirectional Encoder Representations from Transformers) is a language model developed by Google. It uses a transformer architecture to interpret each word in a sentence in light of the words on both sides of it, rather than reading only left-to-right or only right-to-left. It is pre-trained on a large amount of unlabeled text and can then be fine-tuned for specific tasks with comparatively little labeled data. This makes it effective for applications such as interpreting search queries, sentiment analysis, and question answering.

Key characteristics

Bidirectional training: Unlike earlier models that read text in a single direction, BERT builds each word's representation from the entire sentence at once, using context from both the left and the right.

Pre-training and fine-tuning: BERT is first pre-trained on large amounts of unlabeled text to learn general language patterns. Developers can then fine-tune it, typically by adding a small task-specific output layer and training on a modest amount of labeled data, so that it performs well on tasks like sentiment analysis or question answering.

Transformer architecture: It is built from the encoder part of the transformer architecture, which uses a mechanism called "self-attention" to weigh how relevant every other word in the sentence is when building the representation of each word.

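Auto-encoding: It is pre-trained mainly with a masked language modeling objective, in which a portion of the input tokens is hidden and the model learns to reconstruct them from the surrounding context on both sides. The result is a context-dependent vector representation for each token (a word or word piece).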
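The self-attention idea described above can be sketched in a few lines of plain Python. This is a toy, single-head version of scaled dot-product attention; the 2-dimensional "embeddings" and identity projection matrices are made-up assumptions for illustration, not BERT's real weights or sizes. Because no mask is applied, each token's attention weights cover tokens to its right as well as its left, which is the sense in which BERT's encoder is bidirectional.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention with no mask.

    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    scores = matmul(q, transpose(k))
    # Every token scores every other token, both earlier and later ones.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


if __name__ == "__main__":
    # Hypothetical toy inputs: three tokens with 2-dimensional embeddings.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    out, attn = self_attention(tokens, identity, identity, identity)
    for row in attn:
        print([round(w, 3) for w in row])
```

Real BERT models stack many such layers, each with several attention heads and learned projection matrices; this sketch only shows the core weighting step.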

Common applications

Search engines: It helps search engines understand the context of user queries, leading to more accurate results.

Question answering: It can find the specific span of text that answers a question within a larger document.

Sentiment analysis: It can determine the emotional tone (positive, negative, or neutral) of text, such as customer reviews.

Text classification: It can assign text to predefined categories, such as spam vs. not spam or topic labels.

Named Entity Recognition (NER): It identifies and categorizes entities like names of people, organizations, and locations within a text.

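Chatbots and virtual assistants: It can handle the language-understanding side of these systems, such as recognizing what a user is asking for. As an encoder-only model, it does not generate replies on its own.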

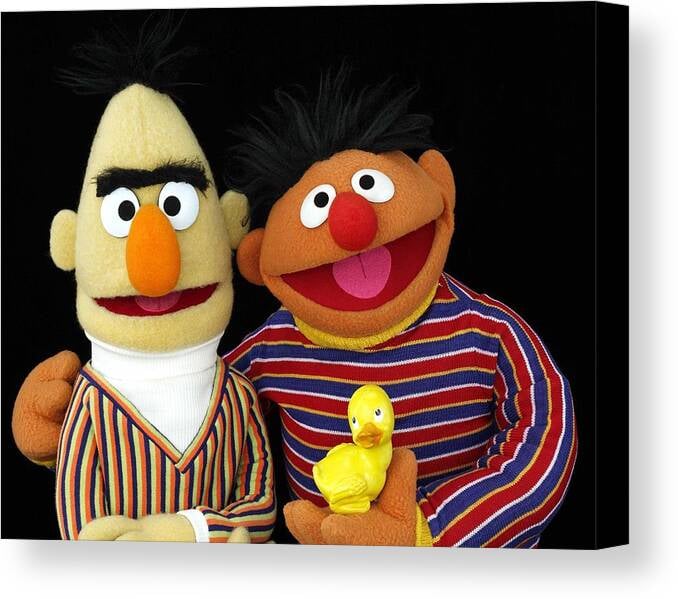

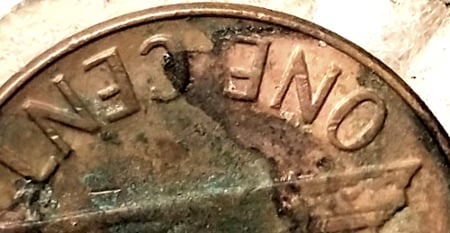

Oh, my!

"The BERT label features a variety of designs & themes to enhance the presentation of different coins and sets."

Comments

@Catbert

@Coinbert

Maybe these guys know?

Nothing is as expensive as free money.

They are a Whatnot seller (TraderBea), and that is the special label they get.

Positive BST as a seller: Namvet69, Lordmarcovan, Bigjpst, Soldi, mustanggt, CoinHoader, moursund, SufinxHi, al410, JWP

I think there’s a much better chance that Ernie knows.

Mark Feld* of Heritage Auctions

*Unless otherwise noted, my posts here represent my personal opinions.

Apparently BERT is a sticker made by the seller TraderBea, who sells on Whatnot.

https://traderbea.com/bertchase/

Prior version:

https://forums.collectors.com/discussion/1106320/anyone-know-what-these-pcgs-trader-bea-holders-are-promotional-item-dealer-bulk-sub

Seems like their marketing is working given how many threads there have been about it here.


Sorry. Only know BART: Bay Area Rapid Transit.


Proud follower of Christ!


A confusing thread for a coin site. I assumed Bert meant the inner letters of LI BERT Y. James

Bert was a great hobo nickel carver